There are also 320 texture units on board, and 96 ROPs, which is right in line with the GP102.

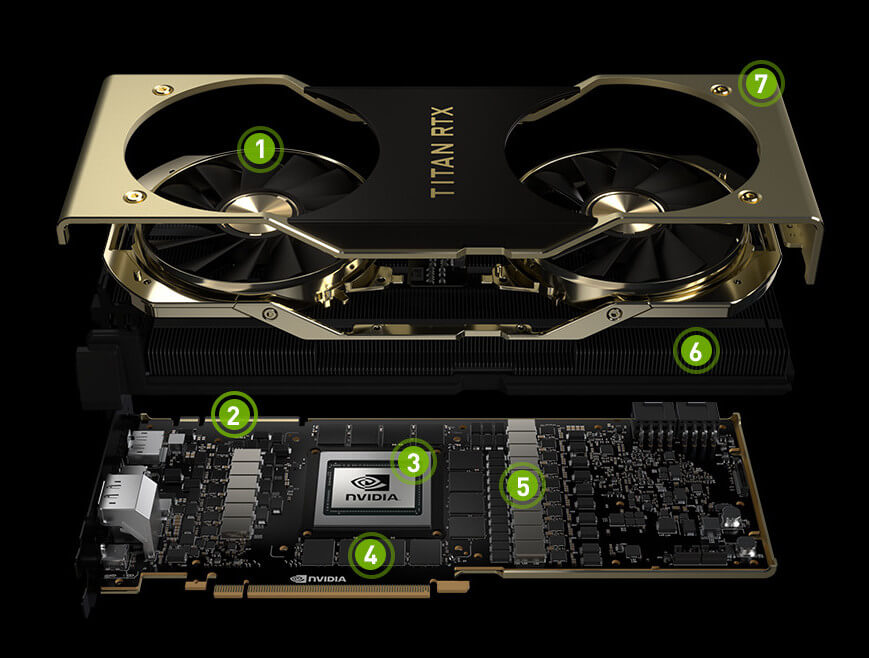

The cores are arranged in 6 GPCs, with 80 SMs. Other features of the GV100 include 5,120 single-precision CUDA cores, 2,560 double-precision FP64 cores, and 640 Tensor cores, which can offer massive performance improvements in Deep Learning workloads, to the tune of up to 110 TFLOPS. The 12GB of HBM2 memory on board the GV100 is linked to the GPU via a 3072-bit interface and offers up to 652.8 GB/s of peak bandwidth, which is about 100 GB/s more than a TITAN Xp.

Tensor Cores can also be put to work on double-precision problems. For instance, when matrices were initialized with random numbers over a dynamic range of 1E+9 and a DGEMM implementation with FP64-equivalent accuracy was run on a Titan RTX (130 TFLOPS on Tensor Cores), the best result was approximately 980 GFLOPS of FP64-equivalent throughput, whereas cublasDgemm reaches only 539 GFLOPS on the card's FP64 FPUs.
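The bandwidth figure above follows from simple arithmetic: bus width times per-pin data rate, divided by 8 bits per byte. The sketch below works backwards from the two quoted numbers, so the per-pin rate it prints is inferred rather than taken from the text, and the function and variable names are made up for illustration. It also puts a number on the DGEMM comparison.

```python
# Peak memory bandwidth (GB/s) = bus width (bits) x per-pin data rate (Gbit/s) / 8.
BUS_WIDTH_BITS = 3072        # quoted in the text
PEAK_BANDWIDTH_GBS = 652.8   # quoted in the text

# Assumption: the per-pin data rate is not stated in the text; it is
# inferred here from the two quoted figures.
pin_rate_gbps = PEAK_BANDWIDTH_GBS * 8 / BUS_WIDTH_BITS


def peak_bandwidth_gbs(bus_width_bits, pin_rate_gbps):
    """Return peak bandwidth in GB/s for a given bus width and per-pin rate."""
    return bus_width_bits * pin_rate_gbps / 8


# DGEMM throughput figures quoted in the text (GFLOPS, Titan RTX).
TENSOR_DGEMM_GFLOPS = 980.0  # FP64-equivalent DGEMM on Tensor Cores
CUBLAS_DGEMM_GFLOPS = 539.0  # cublasDgemm on the FP64 FPUs
dgemm_speedup = TENSOR_DGEMM_GFLOPS / CUBLAS_DGEMM_GFLOPS

if __name__ == "__main__":
    print(f"Inferred per-pin rate: {pin_rate_gbps:.2f} Gbit/s")
    print(f"Peak bandwidth: {peak_bandwidth_gbs(BUS_WIDTH_BITS, pin_rate_gbps):.1f} GB/s")
    print(f"Tensor-Core DGEMM vs cublasDgemm: {dgemm_speedup:.2f}x")
```

Under these figures the Tensor Core approach comes out a little under twice as fast as cublasDgemm on that card.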

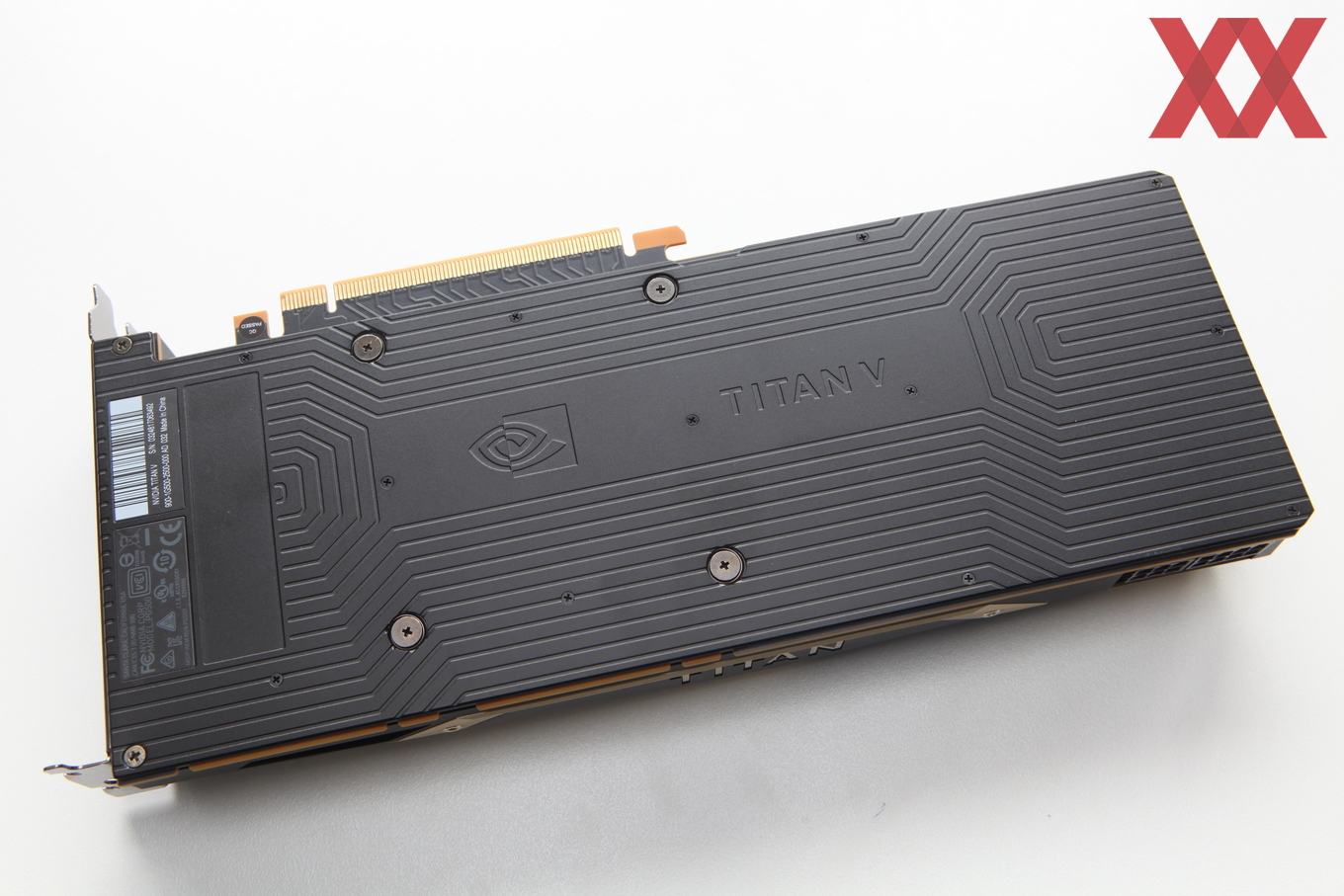

The massive, 21.1B transistor GV100 GPU powering the TITAN V has a base clock of 1,200MHz and a boost clock of 1,455MHz. The GPU is manufactured at 12nm, which allows NVIDIA to pack in a huge number of transistors: the GV100 has nearly double the number of transistors of the 16nm GP102. At its default clocks, the TITAN V offers a peak texture fillrate of 384 GigaTexels/s, which is only slightly higher than a TITAN Xp.

Double-precision (FP64) used to be standard fare on the earlier TITANs, but today, you'll need the Volta-based TITAN V for unlocked performance (6.1 TFLOPS), or AMD's Radeon VII for partially unlocked performance (3.4 TFLOPS). On cards with a 1:32 FP64 rate, FP64 GFLOPS = FP32 GFLOPS ÷ 32; Tensor Core FP16 GFLOPS = GPU clock (MHz) × Tensor Core count × 2 × 64 ÷ 1000.

The Big Red 200 supercomputer offers a point of comparison. The first phase of its deployment consists of 672 dual-socket nodes powered by AMD's EPYC 7742 "Rome" processors. These CPUs provide 3.15 PetaFLOPs of combined FP64 performance. With a total of 8 PetaFLOPs planned for Big Red 200, that leaves just a bit under 5 PetaFLOPs to come from the GPU+CPU system. Consider a node configuration that contains one next-generation AMD "Milan" 64-core CPU and four of NVIDIA's "Ampere" GPUs alongside it. If we take it as fact that Milan boosts FP64 performance by 25% compared to Rome, then the math shows that each of the 256 GPUs to be delivered in the second phase of the Big Red 200 deployment will feature up to 18 TeraFLOPs of FP64 compute performance. Even if "Milan" doubles the FP64 compute power of "Rome", there will be around 17.6 TeraFLOPs of FP64 performance per GPU.
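The Big Red 200 estimate can be retraced in a few lines. This is only a sketch of the reasoning, under stated assumptions: per-CPU "Rome" throughput is taken as the quoted 3.15 PetaFLOPs divided evenly across all first-phase sockets, each second-phase node has one "Milan" CPU per four GPUs, and everything left of the 8 PetaFLOPs target is attributed to the GPUs. The variable and function names are illustrative.

```python
# Figures quoted in the text.
TOTAL_PFLOPS = 8.0          # planned total for Big Red 200
ROME_PHASE_PFLOPS = 3.15    # combined FP64 of the first-phase CPUs
ROME_NODES = 672            # dual-socket "Rome" nodes
SOCKETS_PER_NODE = 2
GPU_COUNT = 256             # GPUs in the second phase
GPUS_PER_NODE = 4           # one "Milan" CPU alongside four GPUs

rome_cpus = ROME_NODES * SOCKETS_PER_NODE
# Assumption: the 3.15 PFLOPS is split evenly across all Rome sockets.
tflops_per_rome = ROME_PHASE_PFLOPS * 1000 / rome_cpus
# Whatever is left of the target must come from the GPU+CPU nodes.
phase2_tflops = (TOTAL_PFLOPS - ROME_PHASE_PFLOPS) * 1000
milan_cpus = GPU_COUNT // GPUS_PER_NODE


def tflops_per_gpu(milan_uplift):
    """FP64 TFLOPS each GPU must deliver, given Milan's uplift over Rome."""
    cpu_share = milan_cpus * tflops_per_rome * milan_uplift
    return (phase2_tflops - cpu_share) / GPU_COUNT


if __name__ == "__main__":
    for uplift in (1.25, 2.0):
        print(f"Milan at {uplift:.2f}x Rome: {tflops_per_gpu(uplift):.1f} TFLOPS per GPU")
```

With a 25% uplift this lands at roughly 18 TFLOPS per GPU, in line with the figure quoted above. With a doubling it gives a value slightly above the quoted 17.6, which suggests the original estimate used somewhat different inputs or rounding.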


The A100 delivers the power of Tensor Cores to HPC applications, accelerating matrix math in full FP64 precision. NVIDIA refers to this new capability as Double-Precision Tensor Cores. As a result, the A100 crunches FP64 math faster than other chips with less work, saving not only time and power but precious memory and I/O bandwidth as well.

Big Red 200, which is based on Cray's Shasta supercomputer building block, is being deployed in two phases.

Remember when RV770 was the first TeraFlop performer? The original Titan had good FP64 performance too, for its time, but later Titans were more like the GeForce cards: they had crippled FP64. Most Quadro cards do as well, though some of the top-end models have had good FP64. Titan V's GV100 processor is better in the HPC space thanks to 6.9 TFLOPS peak FP64 performance (half of its single-precision rate).

A quick run through SiSoftware's Sandra GPGPU Arithmetic.
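The peak figures quoted in this article can be sanity-checked from unit counts and clocks. The sketch below assumes one fused multiply-add (2 FLOPs) per core per clock and one texel per texture unit per clock, which are the usual conventions for theoretical peaks. The helper names are made up for illustration, and the unit counts and clocks are the ones given earlier in the text.

```python
# Unit counts and clocks quoted earlier in the article.
FP64_CORES = 2560
FP32_CORES = 5120
TEXTURE_UNITS = 320
BASE_CLOCK_MHZ = 1200
BOOST_CLOCK_MHZ = 1455


def peak_tflops(cores, clock_mhz, flops_per_core_per_clock=2):
    """Theoretical peak in TFLOPS; assumes one FMA (2 FLOPs) per core per clock."""
    return cores * flops_per_core_per_clock * clock_mhz / 1e6


def texture_fillrate_gtexels(tmus, clock_mhz):
    """Theoretical texture fillrate in GTexels/s; assumes one texel per TMU per clock."""
    return tmus * clock_mhz / 1000


def implied_clock_mhz(tflops, cores, flops_per_core_per_clock=2):
    """Clock (MHz) at which the given core count would reach the given TFLOPS."""
    return tflops * 1e6 / (cores * flops_per_core_per_clock)


if __name__ == "__main__":
    print(f"FP64 at base clock:  {peak_tflops(FP64_CORES, BASE_CLOCK_MHZ):.2f} TFLOPS")
    print(f"FP64 at boost clock: {peak_tflops(FP64_CORES, BOOST_CLOCK_MHZ):.2f} TFLOPS")
    print(f"FP32 at base clock:  {peak_tflops(FP32_CORES, BASE_CLOCK_MHZ):.2f} TFLOPS")
    print(f"Texture fillrate at base clock: "
          f"{texture_fillrate_gtexels(TEXTURE_UNITS, BASE_CLOCK_MHZ):.0f} GTexels/s")
    print(f"Clock implied by 6.9 TFLOPS FP64: {implied_clock_mhz(6.9, FP64_CORES):.0f} MHz")
```

Under these assumptions the 6.1 TFLOPS figure matches the base clock, the 384 GigaTexels/s fillrate matches 320 texture units at the base clock, and the 6.9 TFLOPS figure sits between base and boost, so the differing FP64 numbers likely reflect different reference clocks rather than a contradiction.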
